Existing model-based DFO techniques are primarily designed for small- to medium-scale problems, as the linear algebra cost of each iteration (largely due to the cost of constructing interpolation models) means that their runtime increases rapidly for large problems. There are several settings where scalable DFO algorithms may be useful, such as data assimilation, machine learning, generating adversarial examples for deep neural networks, image analysis, and as a possible proxy for global optimization methods. To address this, we introduce RSDFO, a scalable algorithmic framework for model-based DFO. At each iteration of RSDFO we select a random low-dimensional subspace, build and minimize a model to compute a step in this space, then change the subspace at the next iteration. We provide a probabilistic worst-case complexity analysis of RSDFO. To our knowledge, this is the first subspace model-based DFO method with global complexity and convergence guarantees.
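The iteration described above (draw a random subspace, build a model in it, minimize the model, then redraw) can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `rsdfo_sketch`, the use of a linear interpolation model, the QR-based subspace draw, and the acceptance threshold and radius update factors are all assumptions chosen for brevity.

```python
import numpy as np

def rsdfo_sketch(f, x0, p=2, delta=1.0, max_iters=200, seed=0):
    """Illustrative random-subspace DFO loop (hypothetical, for exposition only)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    n = x.size
    fx = f(x)
    for _ in range(max_iters):
        # Draw a random p-dimensional subspace with orthonormal basis Q (n x p).
        Q, _ = np.linalg.qr(rng.standard_normal((n, p)))
        # Linear interpolation model in the subspace: sample f at x + delta * q_j.
        g = np.array([(f(x + delta * Q[:, j]) - fx) / delta for j in range(p)])
        gnorm = np.linalg.norm(g)
        if gnorm == 0.0:
            # Model is flat at this scale; shrink the radius and redraw.
            delta *= 0.5
            continue
        # Minimize the linear model over the trust region ||s|| <= delta.
        s = -delta * g / gnorm
        x_new = x + Q @ s
        f_new = f(x_new)
        # Ratio of actual decrease to the decrease predicted by the model.
        rho = (fx - f_new) / (delta * gnorm)
        if rho >= 0.1:
            # Assumed acceptance rule: keep the step and enlarge the radius.
            x, fx = x_new, f_new
            delta = min(2.0 * delta, 1e3)
        else:
            # Reject the step and shrink the radius.
            delta *= 0.5
    return x, fx
```

In this sketch each iteration uses only `p + 1` objective evaluations and linear algebra on an `n x p` matrix, which is the kind of saving a subspace approach is aiming for when `p` is much smaller than `n`.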

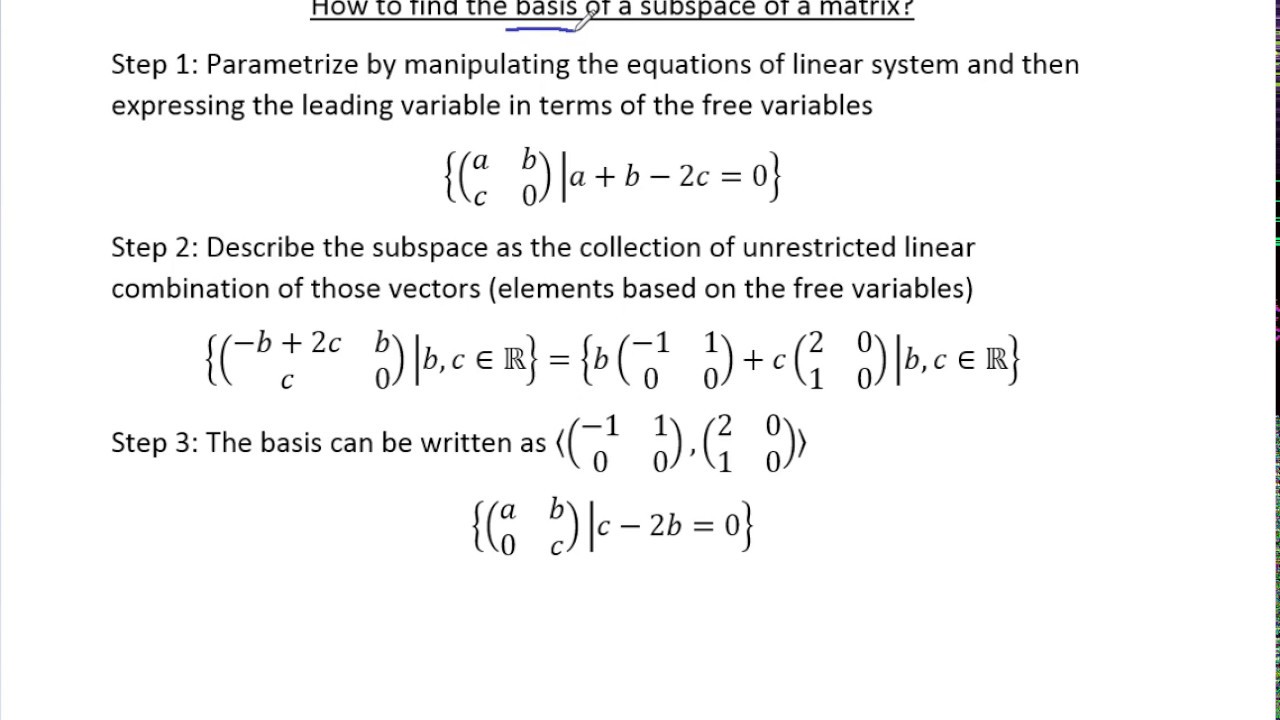


This paper addresses a key question regarding model-based DFO: how to improve the scalability of this class of methods.


We then describe a practical implementation of this framework, which we call DFBGN. We outline efficient techniques for selecting the interpolation points and search subspace, yielding an implementation that has a low per-iteration linear algebra cost (linear in the problem dimension) while also achieving fast objective decrease as measured by evaluations. Extensive numerical results demonstrate that DFBGN has improved scalability, yielding strong performance on large-scale nonlinear least-squares problems.

An important class of nonlinear optimization methods is so-called derivative-free optimization (DFO). In DFO, we consider problems where derivatives of the objective (and/or constraints) are not available to be evaluated, and we only have access to function values. This topic has received growing attention in recent years, and is primarily used for objectives which are black-box (so analytic derivatives or algorithmic differentiation are not available), and expensive to evaluate or noisy (so finite differencing is impractical or inaccurate). There are many types of DFO methods, such as model-based, direct and pattern search, implicit filtering and others (see for a recent survey), and these techniques have been used in a variety of applications. Here, we consider model-based DFO methods for unconstrained optimization, which are based on iteratively constructing and minimizing interpolation models for the objective. We also specialize these methods for nonlinear least-squares problems, by constructing interpolation models for each residual term rather than for the full objective.
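To make the least-squares specialization concrete, the standard formulation can be written as follows. The notation is an assumption introduced here for illustration and does not come from the text: $r_i$ are the residuals, $m$ is their number, $x_k$ is the current iterate, and $J_k$ is a matrix whose rows are the gradients of the linear interpolation models of the individual residuals.

```latex
\begin{align*}
f(x) &= \tfrac{1}{2} \sum_{i=1}^{m} r_i(x)^2 = \tfrac{1}{2}\,\|r(x)\|_2^2, \\
r(x_k + s) &\approx \hat{r}_k(s) := r(x_k) + J_k s, \\
f(x_k + s) &\approx \tfrac{1}{2}\,\|r(x_k) + J_k s\|_2^2 .
\end{align*}
```

Modelling each residual linearly and then squaring yields a quadratic model of $f$ from linear interpolation alone, which is the usual Gauss–Newton construction.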

This framework is specialized to nonlinear least-squares problems, using a model-based approach built on the Gauss–Newton method. The resulting method achieves scalability by constructing local linear interpolation models to approximate the Jacobian, and computes new steps at each iteration in a subspace of user-determined dimension.
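As a rough illustration of this idea, the sketch below performs a single Gauss–Newton step restricted to a given subspace, with the Jacobian approximated by linear interpolation of the residuals. It is a simplified stand-in under stated assumptions, not DFBGN itself: the name `subspace_gauss_newton_step`, the choice of interpolation points `x + delta * q_j`, and the step-scaling safeguard are all hypothetical.

```python
import numpy as np

def subspace_gauss_newton_step(r, x, Q, delta):
    """One derivative-free Gauss-Newton step restricted to span(Q) (illustrative).

    r     : callable returning the residual vector r(x) of length m
    x     : current point, array of length n
    Q     : (n, p) matrix with orthonormal columns spanning the subspace
    delta : trust-region radius, also used as the interpolation step length
    """
    r0 = r(x)
    p = Q.shape[1]
    # Linear interpolation of every residual along each subspace direction
    # gives an m x p approximation to the reduced Jacobian J(x) @ Q.
    J_hat = np.column_stack(
        [(r(x + delta * Q[:, j]) - r0) / delta for j in range(p)]
    )
    # Gauss-Newton step: minimize 0.5 * ||r0 + J_hat @ s||^2 over s in R^p.
    s, *_ = np.linalg.lstsq(J_hat, -r0, rcond=None)
    # Crude safeguard (an assumption, not an exact trust-region solve):
    # scale the step back so that ||s|| <= delta.
    norm_s = np.linalg.norm(s)
    if norm_s > delta:
        s = s * (delta / norm_s)
    return x + Q @ s
```

Only the `m x p` matrix `J_hat` is ever formed, so the least-squares solve depends on the subspace dimension `p` rather than on the full problem dimension `n`.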

We introduce a general framework for large-scale model-based derivative-free optimization based on iterative minimization within random subspaces. We present a probabilistic worst-case complexity analysis for our method; in particular, we prove high-probability bounds on the number of iterations before a given optimality is achieved.
